Let's Build a Duck Detector!

Notes for the fast.ai 2020 course

The project we'll complete in this chapter is a duck detector. It will distinguish between three types of duck: white ducks, green head ducks, and rubber ducks.

At the time of writing, Bing Image Search is the best option we know of for finding and downloading images. It's free for up to 1,000 queries per month, and each query can download up to 150 images.

import os
key = os.environ.get('AZURE_SEARCH_KEY', 'XXX')

Once you've set key, you can use search_images_bing. This function is provided by the small utils module included with the notebooks online. If you're not sure where a function is defined, you can just type its name in your notebook to find out:

search_images_bing

results = search_images_bing(key, 'duck')

ims = results.attrgot('contentUrl')

len(ims)

We've successfully downloaded the URLs of 150 ducks (or, at least, images that Bing Image Search finds for that search term).
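attrgot pulls one named field out of every search result. For results that are dicts, it behaves roughly like the list comprehension below. This is a plain-Python sketch, not fastcore's code; the sample records are made up, and real Bing results carry many more fields than these.

```python
# Made-up search results for illustration; real Bing results have many more fields.
results = [
    {'name': 'duck 1', 'contentUrl': 'https://example.com/duck1.jpg'},
    {'name': 'duck 2', 'contentUrl': 'https://example.com/duck2.jpg'},
    {'name': 'duck 3'},  # a result that is missing the field
]

def attrgot(items, key, default=None):
    "Collect key from each dict item, falling back to default when it is absent."
    return [item.get(key, default) for item in items]

ims = attrgot(results, 'contentUrl')
print(ims)
# ['https://example.com/duck1.jpg', 'https://example.com/duck2.jpg', None]
```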

dest = 'gdrive/MyDrive/images/green head.jpg'

download_url(ims[0], dest)

im = Image.open(dest)

im.to_thumb(128,128)

path = Path('gdrive/MyDrive/images/green head')
path.mkdir(parents=True, exist_ok=True)
results = search_images_bing(key, 'green head duck')
download_images(path, urls=results.attrgot('contentUrl'))

Our folder has image files, as we'd expect:

fns = get_image_files(path)

fns

This seems to have worked nicely, so let's use fastai's download_images to download all the URLs for each of our search terms. We'll put each in a separate folder:

duck_types = 'white', 'green head', 'rubber'
path = Path('gdrive/MyDrive/images/ducks')
path.mkdir(parents=True, exist_ok=True)
for o in duck_types:
    dest = (path/o)
    dest.mkdir(exist_ok=True)
    results = search_images_bing(key, f'{o} duck')
    download_images(dest, urls=results.attrgot('contentUrl'))

fns = get_image_files(path)

fns
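Because each search term gets its own folder, the folder name can double as the label; that is what parent_label relies on in the DataBlock later in this chapter. The sketch below builds the same layout in a temporary directory with empty placeholder files. The two helpers are simplified, hypothetical stand-ins for get_image_files and parent_label, and the extension list is an assumption (fastai's list is longer).

```python
import tempfile
from pathlib import Path

IMAGE_EXTS = {'.jpg', '.jpeg', '.png'}  # assumption: a short list for illustration

def get_image_files_sketch(path):
    "Recursively list files under path whose extension looks like an image."
    return sorted(p for p in Path(path).rglob('*') if p.suffix.lower() in IMAGE_EXTS)

def parent_label_sketch(fn):
    "The label is the name of the folder containing the file."
    return Path(fn).parent.name

with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)/'ducks'
    for o in ('white', 'green head', 'rubber'):
        dest = root/o
        dest.mkdir(parents=True, exist_ok=True)
        (dest/'00000000.jpg').touch()  # empty placeholder, not a real image
    (root/'notes.txt').touch()          # non-image files are skipped
    fns = get_image_files_sketch(root)
    labels = [parent_label_sketch(f) for f in fns]

print(labels)  # ['green head', 'rubber', 'white']
```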

Often when we download files from the internet, there are a few that are corrupt. Let's check:

path = Path('gdrive/MyDrive/images/ducks')

failed = verify_images(fns)

failed

To remove all the failed images, you can use unlink on each of them. Note that, like most fastai functions that return a collection, verify_images returns an object of type L, which includes the map method. This calls the passed function on each element of the collection:

failed.map(Path.unlink)
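The check-then-delete step can be sketched without fastai. Note that this swaps in a different, cruder technique: fastai's verify_images actually tries to open each file with PIL, whereas the hypothetical looks_like_image below only compares the first bytes against the JPEG and PNG signatures. The loop over failed has the same effect as failed.map(Path.unlink).

```python
import tempfile
from pathlib import Path

# File signatures ("magic bytes") for JPEG and PNG only, for brevity.
MAGIC = (b'\xff\xd8\xff', b'\x89PNG\r\n\x1a\n')

def looks_like_image(fn):
    "Crude check: does the file start with a known JPEG/PNG signature?"
    with open(fn, 'rb') as f:
        head = f.read(8)
    return head.startswith(MAGIC)

def verify_images_sketch(fns):
    "Return the files that fail the signature check."
    return [fn for fn in fns if not looks_like_image(fn)]

with tempfile.TemporaryDirectory() as tmp:
    good = Path(tmp)/'good.jpg'
    good.write_bytes(b'\xff\xd8\xff\xe0' + b'\x00' * 16)
    bad = Path(tmp)/'bad.jpg'
    bad.write_bytes(b'<html>Not found</html>')  # e.g. an error page saved as .jpg
    failed = verify_images_sketch([good, bad])
    for fn in failed:
        fn.unlink()  # delete each corrupt file
    remaining = sorted(p.name for p in Path(tmp).iterdir())

print(remaining)  # ['good.jpg']
```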

From Data to DataLoaders

We need to tell fastai at least four things:

- What kinds of data we are working with

- How to get the list of items

- How to label these items

- How to create the validation set
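For the last of these, the validation set, the RandomSplitter(valid_pct=0.2, seed=42) used below shuffles the item indices and holds out the first 20% of them. Here is a plain-Python sketch of that idea using the standard library's random module. fastai draws its permutation from PyTorch, so the actual indices will differ; what carries over is that a fixed seed gives the same split on every run.

```python
import random

def random_split_sketch(n_items, valid_pct=0.2, seed=42):
    "Shuffle indices with a fixed seed, then hold out the first valid_pct of them."
    idxs = list(range(n_items))
    random.Random(seed).shuffle(idxs)  # seeded RNG, so the split is reproducible
    cut = int(valid_pct * n_items)
    return sorted(idxs[cut:]), sorted(idxs[:cut])  # (train, valid)

train, valid = random_split_sketch(10)
print(len(train), len(valid))  # 8 2
```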

With the data block API, you can fully customize every stage of creating your DataLoaders. Here is what we need to create a DataLoaders for the dataset that we just downloaded:

ducks = DataBlock(
    blocks=(ImageBlock, CategoryBlock),
    get_items=get_image_files,
    splitter=RandomSplitter(valid_pct=0.2, seed=42),
    get_y=parent_label,
    item_tfms=Resize(128))
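Resize(128) brings every image to the same size so the images can be stacked into batches. By default fastai's Resize crops: randomly on the training set and from the center on the validation set. The arithmetic for a centered square crop can be sketched as below; center_crop_box is a hypothetical helper, and fastai's own implementation differs in detail. The box uses the (left, top, right, bottom) order that PIL's Image.crop expects.

```python
def center_crop_box(w, h, size):
    "Largest centered square as (left, top, right, bottom), plus the scale to size x size."
    side = min(w, h)
    left = (w - side) // 2
    top = (h - side) // 2
    return (left, top, left + side, top + side), size / side

box, scale = center_crop_box(400, 300, 128)
print(box, round(scale, 4))  # (50, 0, 350, 300) 0.4267
```

For a 400x300 photo, the 50-pixel strips on the left and right are discarded before the remaining 300x300 square is scaled down to 128x128.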

DataBlock is like a template for creating a DataLoaders. We still need to tell fastai the actual source of our data—in this case, the path where the images can be found:

dls = ducks.dataloaders(path)

dls.valid.show_batch(max_n=4, nrows=1)

ducks = ducks.new(item_tfms=Resize(224), batch_tfms=aug_transforms(mult=2))

dls = ducks.dataloaders(path)

dls.train.show_batch(max_n=8, nrows=2, unique=True)

learn = cnn_learner(dls, resnet18, metrics=accuracy)

learn.fine_tune(4)

interp = ClassificationInterpretation.from_learner(learn)

interp.plot_confusion_matrix()
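The confusion matrix counts how often each actual class (rows) was predicted as each class (columns), so the diagonal holds the correct predictions and everything off the diagonal is a mistake. A minimal sketch of the counting, using made-up labels rather than results from the model above:

```python
from collections import Counter

def confusion_matrix(actual, predicted, vocab):
    "Rows are actual classes, columns are predicted classes, entries are counts."
    counts = Counter(zip(actual, predicted))
    return [[counts[(a, p)] for p in vocab] for a in vocab]

vocab = ['green head', 'rubber', 'white']
# Made-up labels for illustration only.
actual    = ['white', 'white', 'rubber', 'green head', 'green head']
predicted = ['white', 'rubber', 'rubber', 'green head', 'white']
cm = confusion_matrix(actual, predicted, vocab)
for name, row in zip(vocab, cm):
    print(f'{name:>10}', row)
```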

learn.export()

path = Path()

path.ls(file_exts='.pkl')

%mv export.pkl gdrive/MyDrive/

learn_inf = load_learner(path/'gdrive/MyDrive/export.pkl')
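learn.export saves the Learner (model, weights, and the DataLoaders' transforms and vocab, but not the data itself) to export.pkl with torch.save, which is built on pickle; load_learner reads it back. The round trip can be illustrated with plain pickle and a hypothetical TinyClassifier class standing in for the Learner. As with any pickle, the code that loads the file needs the same class definitions importable, which is why the inference environment must also have fastai installed.

```python
import pickle
import tempfile
from pathlib import Path

class TinyClassifier:
    "Hypothetical stand-in for a Learner: a vocab plus some 'weights'."
    def __init__(self, vocab, weights):
        self.vocab = vocab
        self.weights = weights

model = TinyClassifier(['green head', 'rubber', 'white'], [0.1, -0.3, 0.7])

with tempfile.TemporaryDirectory() as tmp:
    fname = Path(tmp)/'export.pkl'
    with open(fname, 'wb') as f:
        pickle.dump(model, f)    # loosely analogous to learn.export()
    with open(fname, 'rb') as f:
        loaded = pickle.load(f)  # loosely analogous to load_learner(fname)

print(loaded.vocab)  # ['green head', 'rubber', 'white']
```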

learn_inf.dls.vocab

btn_upload = widgets.FileUpload()

btn_upload

img = PILImage.create(btn_upload.data[-1])

img

We can use an Output widget to display it:

out_pl = widgets.Output()

out_pl.clear_output()

with out_pl: display(img.to_thumb(128,128))

out_pl

Then we can get our predictions:

pred,pred_idx,probs = learn_inf.predict(img)

and use a Label to display them:

lbl_pred = widgets.Label()

lbl_pred.value = f'Prediction: {pred}; Probability: {probs[pred_idx]:.04f}'

lbl_pred

Label(value='Prediction: white; Probability: 1.0000')
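predict returns three things: the decoded label, its index in the vocab, and the probability of every class. The sketch below shows how the three relate, using made-up probabilities in a plain list (the real probs is a PyTorch tensor). The :.04f format spec prints four decimal places, which is why a probability just under 1 is displayed as 1.0000.

```python
vocab = ['green head', 'rubber', 'white']
probs = [0.00002, 0.00001, 0.99997]  # made-up values, one per class in vocab

pred_idx = max(range(len(probs)), key=probs.__getitem__)  # index of the highest probability
pred = vocab[pred_idx]
msg = f'Prediction: {pred}; Probability: {probs[pred_idx]:.04f}'
print(msg)  # Prediction: white; Probability: 1.0000
```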

We'll need a button to do the classification. It looks exactly like the upload button:

btn_run = widgets.Button(description='Classify')

btn_run

We'll also need a click event handler; that is, a function that will be called when it's pressed.

def on_click_classify(change):
    img = PILImage.create(btn_upload.data[-1])
    out_pl.clear_output()
    with out_pl: display(img.to_thumb(128,128))
    pred,pred_idx,probs = learn_inf.predict(img)
    lbl_pred.value = f'Prediction: {pred}; Probability: {probs[pred_idx]:.04f}'

btn_run.on_click(on_click_classify)

You can test the button now by pressing it, and you should see the image and predictions update automatically!
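on_click stores the handler, and ipywidgets calls it with the button instance as its single argument each time the button is pressed (so change in on_click_classify is actually the button). The pattern can be sketched with a hypothetical FakeButton class; this is not the ipywidgets implementation, and its click method simply stands in for a user's press.

```python
class FakeButton:
    "Hypothetical stand-in for widgets.Button, just enough to show on_click."
    def __init__(self, description=''):
        self.description = description
        self._handlers = []

    def on_click(self, callback):
        "Register a handler; it will receive the button itself."
        self._handlers.append(callback)

    def click(self):
        "Simulate a press by calling every registered handler."
        for handler in self._handlers:
            handler(self)

clicks = []

def on_click_classify(button):
    # In the notebook this would read the upload, predict, and update the Label.
    clicks.append(button.description)

btn_run = FakeButton(description='Classify')
btn_run.on_click(on_click_classify)
btn_run.click()
print(clicks)  # ['Classify']
```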

We can now put them all in a vertical box (VBox) to complete our GUI:

VBox([widgets.Label('Select your duck!'),
      btn_upload, btn_run, out_pl, lbl_pred])

Deploying Your Notebook into a Real App

For at least the initial prototype of your application, and for any hobby projects that you want to show off, you can easily host them for free.

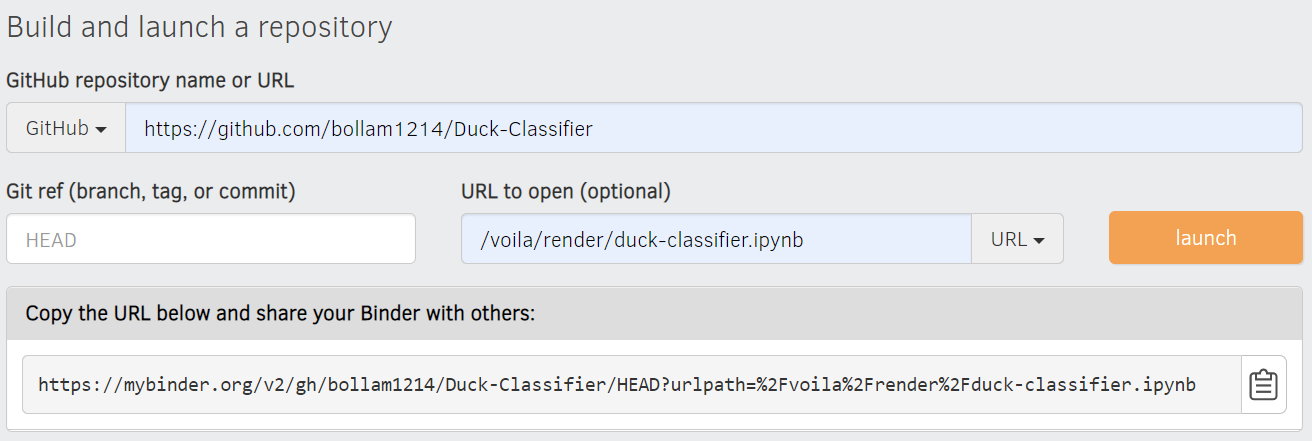

In early 2020, the simplest (and free!) approach is to use Binder.

Get Writing!

Rachel Thomas, cofounder of fast.ai, wrote in the article "Why You (Yes, You) Should Blog":

The top advice I would give my younger self would be to start blogging sooner. Here are some reasons to blog:

- It’s like a resume, only better. I know of a few people who have had blog posts lead to job offers!

- Helps you learn. Organizing knowledge always helps me synthesize my own ideas. One of the tests of whether you understand something is whether you can explain it to someone else. A blog post is a great way to do that.

- I’ve gotten invitations to conferences and invitations to speak from my blog posts. I was invited to the TensorFlow Dev Summit (which was awesome!) for writing a blog post about how I don’t like TensorFlow.

- Meet new people. I’ve met several people who have responded to blog posts I wrote.

- Saves time. Any time you answer a question multiple times through email, you should turn it into a blog post, which makes it easier for you to share the next time someone asks.

Perhaps her most important tip is this:

You are best positioned to help people one step behind you. The material is still fresh in your mind. Many experts have forgotten what it was like to be a beginner (or an intermediate) and have forgotten why the topic is hard to understand when you first hear it. The context of your particular background, your particular style, and your knowledge level will give a different twist to what you’re writing about.

The full details on how to set up a blog are in fastpages. If you don't have a blog already, take a look at that now, because we've got a really great approach set up for you to start blogging for free, with no ads—and you can even use Jupyter Notebook!